Building Lecture Analytics at JISC's CAN Hackathon

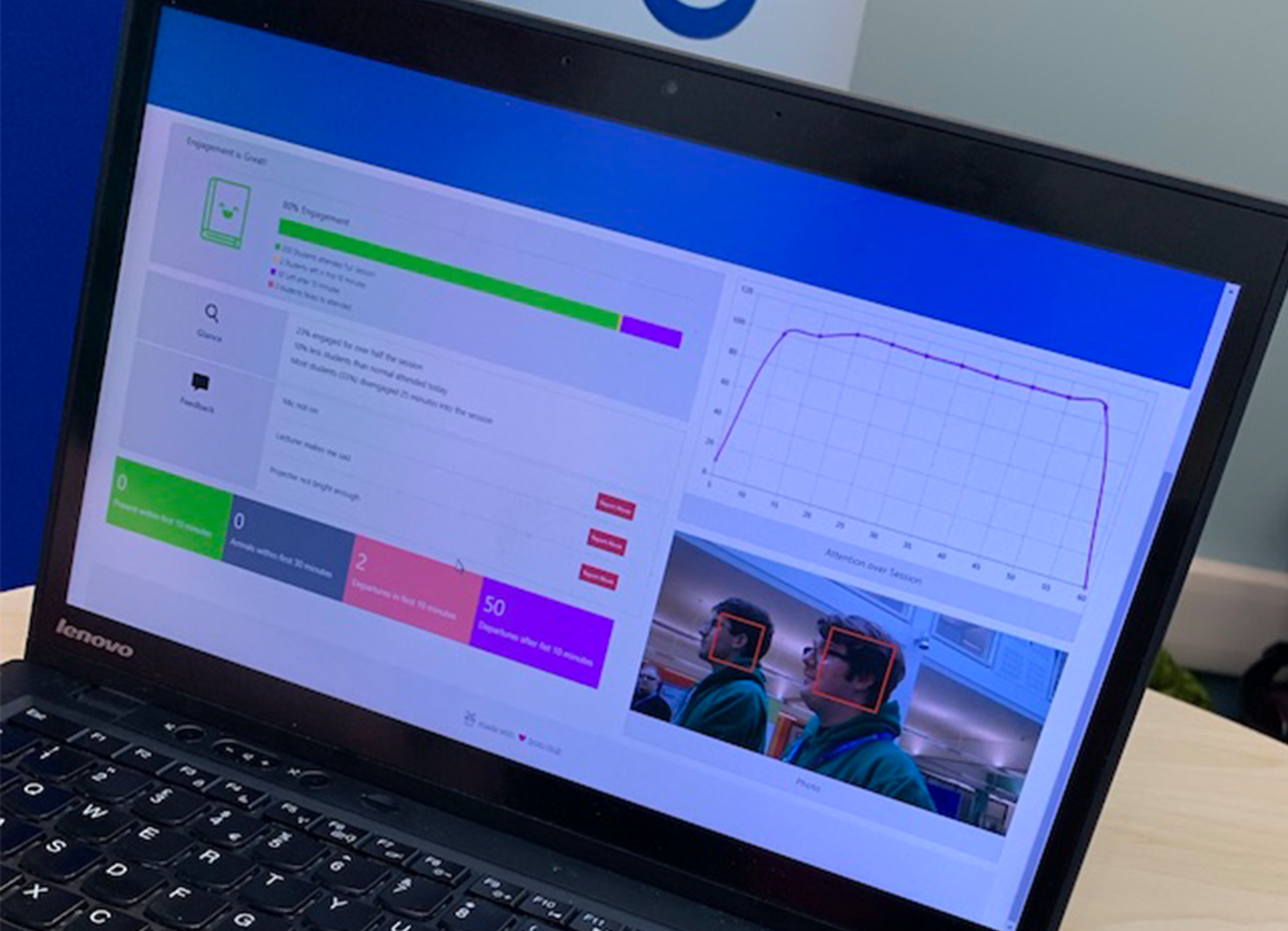

After our success at JISC’s DigiFest hackathon, the team were invited back this week to Milton Keynes to work collaboratively with other groups. We also built a new product to help teaching staff understand where students are engaging, and why engagement might be dropping across lectures, by anonymously monitoring student faces in lecture theatres to estimate attentiveness and emotion.

This conference was about the Change Agents Network, a network of staff and students working in partnership to support curriculum enhancement and innovation across higher and further education globally. We aimed our entry at that theme, building a product that would help staff track whether students were engaging with their lecture material, spot where attention dropped and get a better understanding of their audience.

Day 1: Travel

We started the hackathon by heading down to the Open University campus in Milton Keynes and checking in at the hotel. We then went straight out to tea at a local Indian restaurant, where we met the other participating teams and found out where they came from and what their interests in tech were. The meal was great. Afterwards we headed back to the hotel to start planning the code, building mockups in Figma and deciding who would be doing what the following morning.

We also saw Uber Eats robots, and they’re super cool.

Day 2: Code

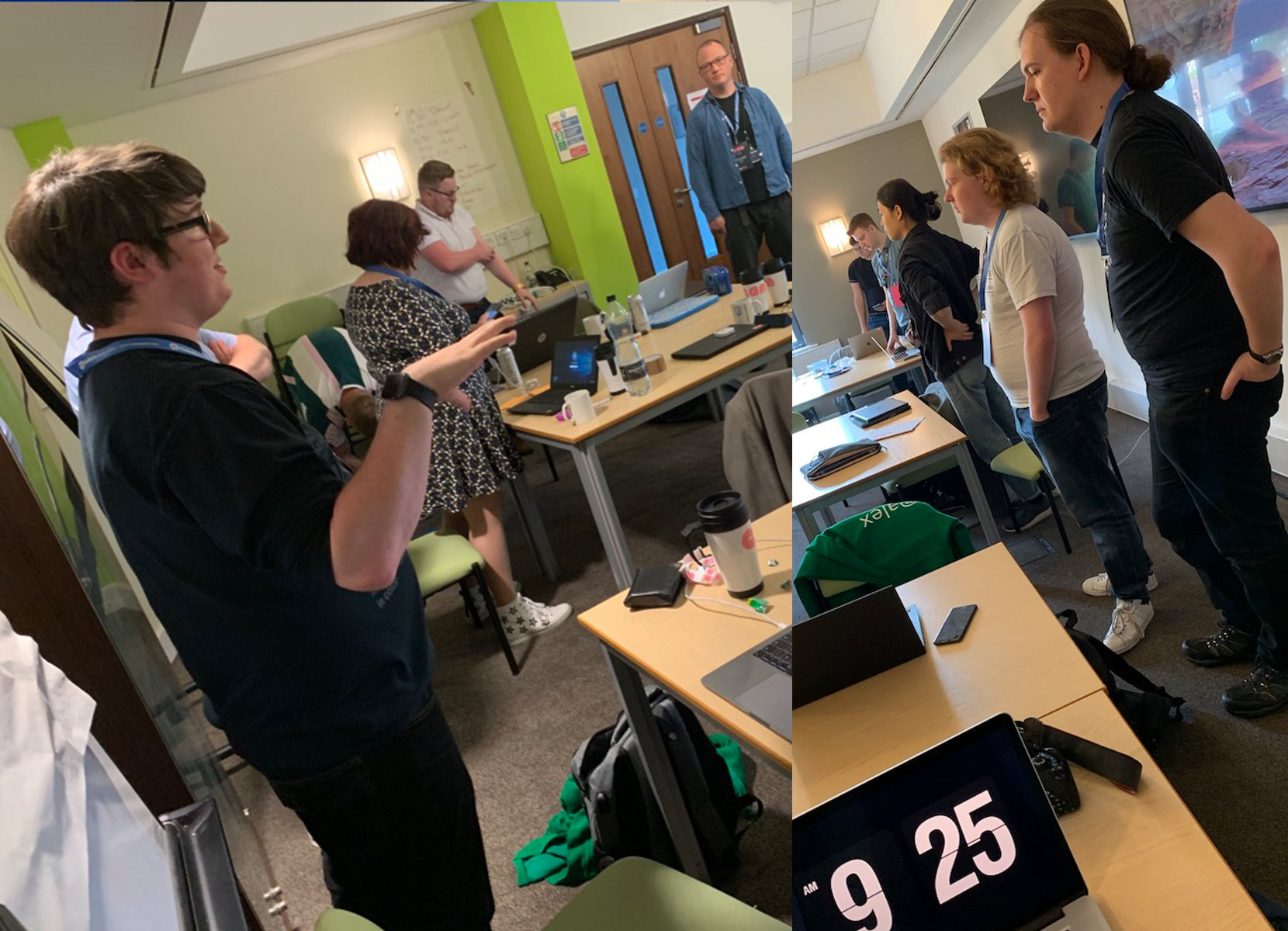

After breakfast, we headed out to the event, got our table, initialised the Git repository and started working. We began with a stand-up where we told everyone what we were planning to build over the event and the technologies we planned to use to realise our ideas.

We then moved on to the implementation. Harry set up Entity Framework to manage our users and data objects; Alex worked on pulling images from our client application, processing them and returning them to the database; and Dan worked on processing data through the app, building data models and our login system.

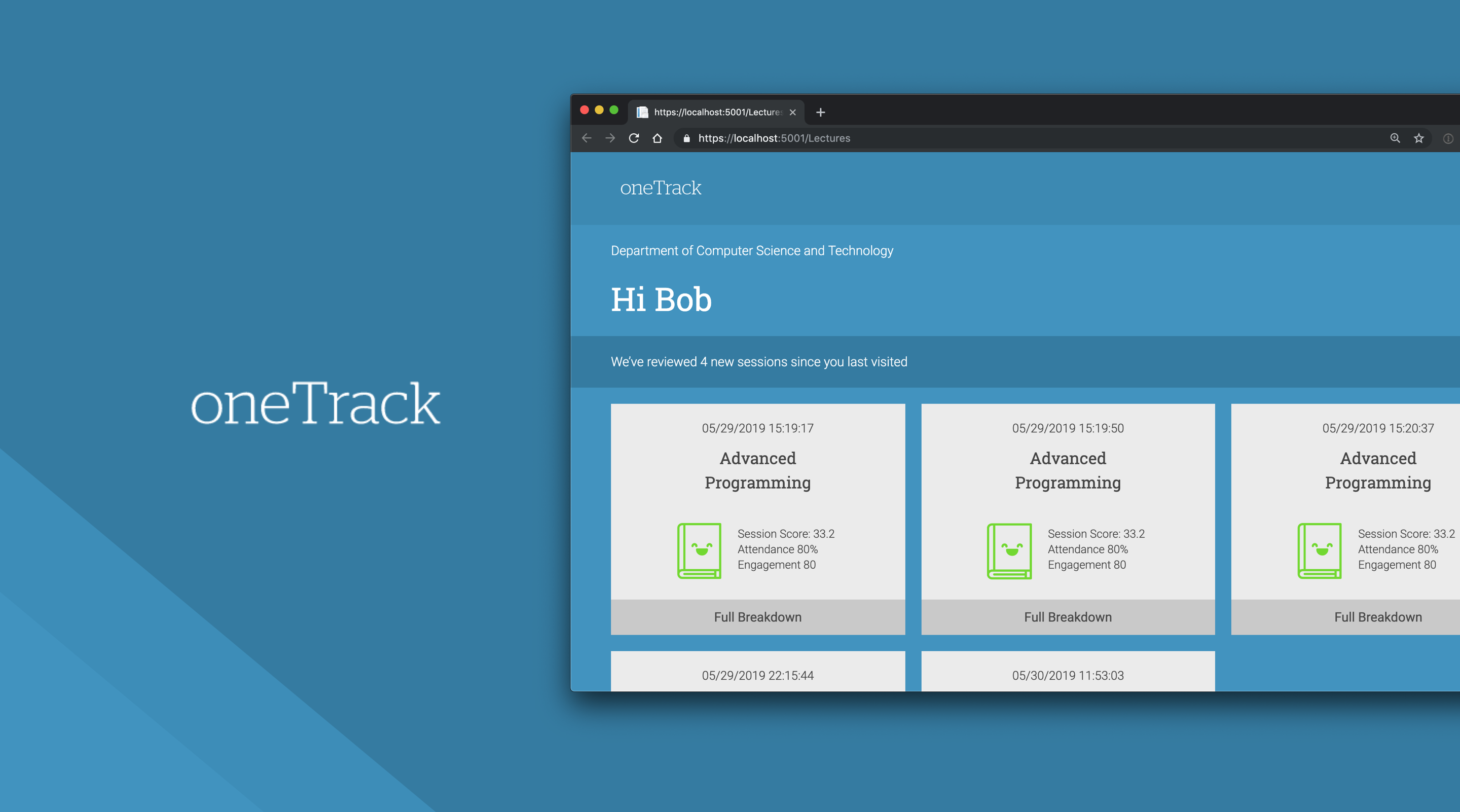

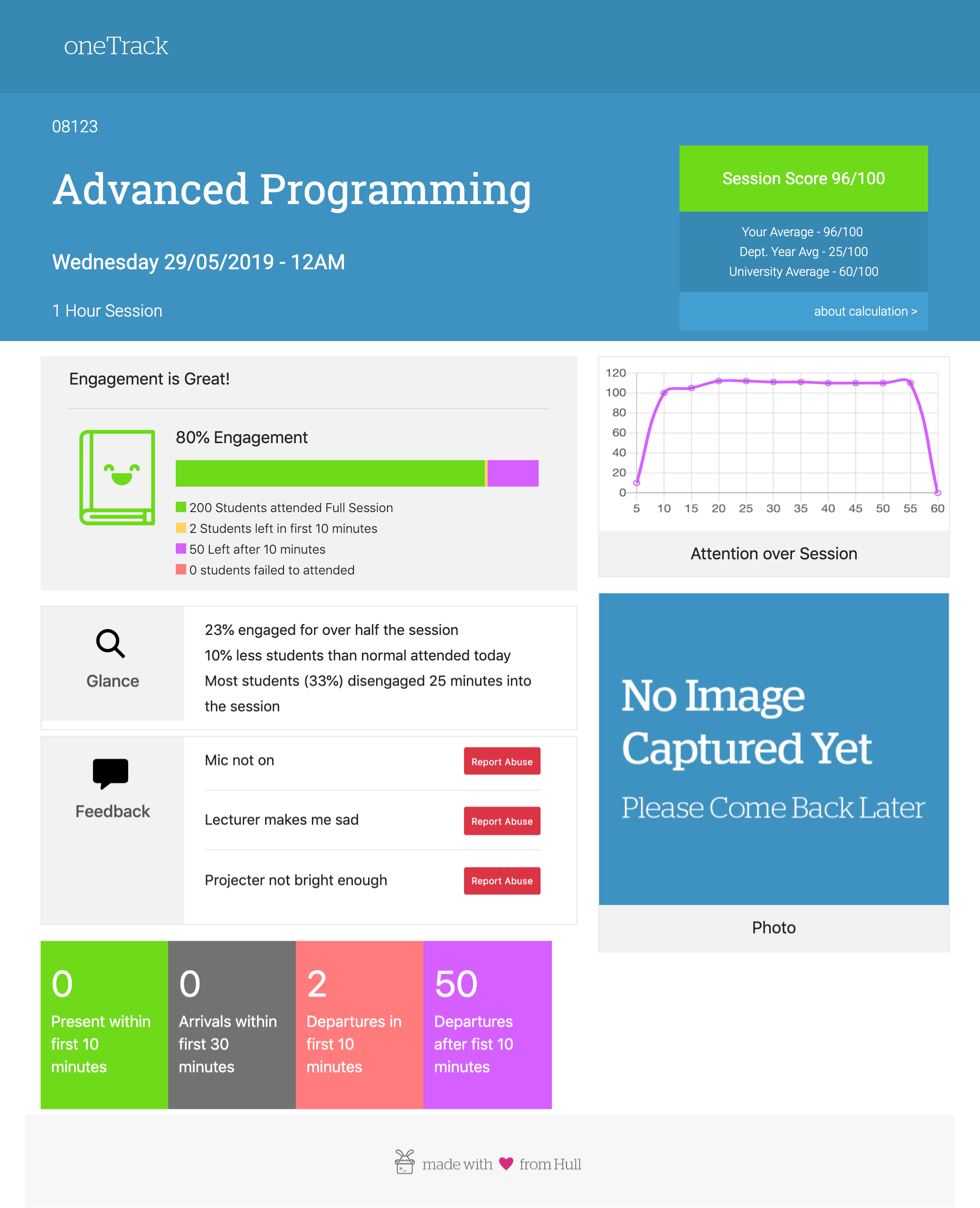

I took responsibility for the frontend, using MVC to show users their teaching sessions and to create graphs and diagrams that visualise each session. By the end of day two, we were happy with how the project was going, and we had our skeleton design and data processing working correctly. We headed off to the conference gala dinner, after which the whole team went back to do some late-night coding in Harry’s room.

Day 3: Code faster then pitch

The clock was ticking on day three, with only three hours to perfect our application before we pitched it to the judging panel. I worked on the data shown in the frontend, Harry and Alex cracked down on data-processing errors and Dan fixed the data models.

We finished with time to spare, headed out for lunch and then set up ready to present in a trade-floor style space in the University library. The pitch went down well, and there was a lot of interest in the product we made, especially in the ethical safeguards we included to stop data becoming identifiable, such as recording only metadata rather than images of students. Staff and students alike thought it could be instrumental in their classrooms.

If we were to build this into a real product, we’d link the recordings to the institution’s lecture recording software (e.g. Panopto) to allow playback of the session and let teaching staff see directly where students disengage and re-engage.
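As a rough illustration of that idea, here is a minimal sketch of how the moments worth jumping to in a recording might be found from anonymised data. It is not the code from the hackathon (which used Entity Framework and MVC); it is written in Python for brevity, and the `AttentionSample` type, the `find_drops` function and the 0.5 threshold are all hypothetical. It assumes one aggregate sample per minute holding only counts of faces, never images.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionSample:
    """Hypothetical per-minute aggregate: counts only, no images or identities."""
    minute: int      # minutes since the start of the session
    attentive: int   # faces judged attentive in this minute
    total: int       # faces detected in this minute


def attention_ratio(sample):
    # Treat a minute with no detected faces as zero attention (an assumption).
    return sample.attentive / sample.total if sample.total else 0.0


def find_drops(samples, threshold=0.5):
    """Return (start_minute, end_minute) spans where the ratio is below threshold."""
    spans = []
    start = None  # first minute of the current low-attention span, if any
    prev = None   # minute of the previously visited sample
    for s in sorted(samples, key=lambda x: x.minute):
        low = attention_ratio(s) < threshold
        if low and start is None:
            start = s.minute               # a new low-attention span begins
        elif not low and start is not None:
            spans.append((start, prev))    # span ended at the previous sample
            start = None
        prev = s.minute
    if start is not None:
        spans.append((start, prev))        # session ended while attention was low
    return spans


session = [
    AttentionSample(0, 8, 10),
    AttentionSample(1, 4, 10),
    AttentionSample(2, 3, 10),
    AttentionSample(3, 9, 10),
    AttentionSample(4, 2, 10),
]
drops = find_drops(session)
```

Each span could then be turned into a timestamp for the lecture recording, so staff could jump straight to the point where attention fell away.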

End Product

We were pleased with the end product that we created, and are planning to open-source it in case anyone wants to take it further. Very kindly, JISC covered our costs for the event - thank you to them for inviting us and giving us the opportunity to come back and work with new teams. You can read more about it on the projects page here.

Detour

At the end of the conference, we were about to head home when we wondered how far Bletchley Park was from Milton Keynes. It turned out to be an 8-minute drive, so we went and visited the National Museum of Computing there.

We arrived 20 minutes before Bletchley Park itself closed, but had an hour and a half at the museum. It holds everything from Colossus (the first large-scale computer) to the Apple II, and is certainly worth a trip. Next year we hope to visit Bletchley Park itself as a #hullCSS day-trip.

Video

Harry also has a blog, which you can visit here.

The CAN 2019 brand is a partnership between JISC and the Open University.